FAQ: Can Artificial Intelligence Keep Our Deceased Loved Ones Alive in Digital Form?

Digital Resurrection AI: 17 Realities & Risks for Families

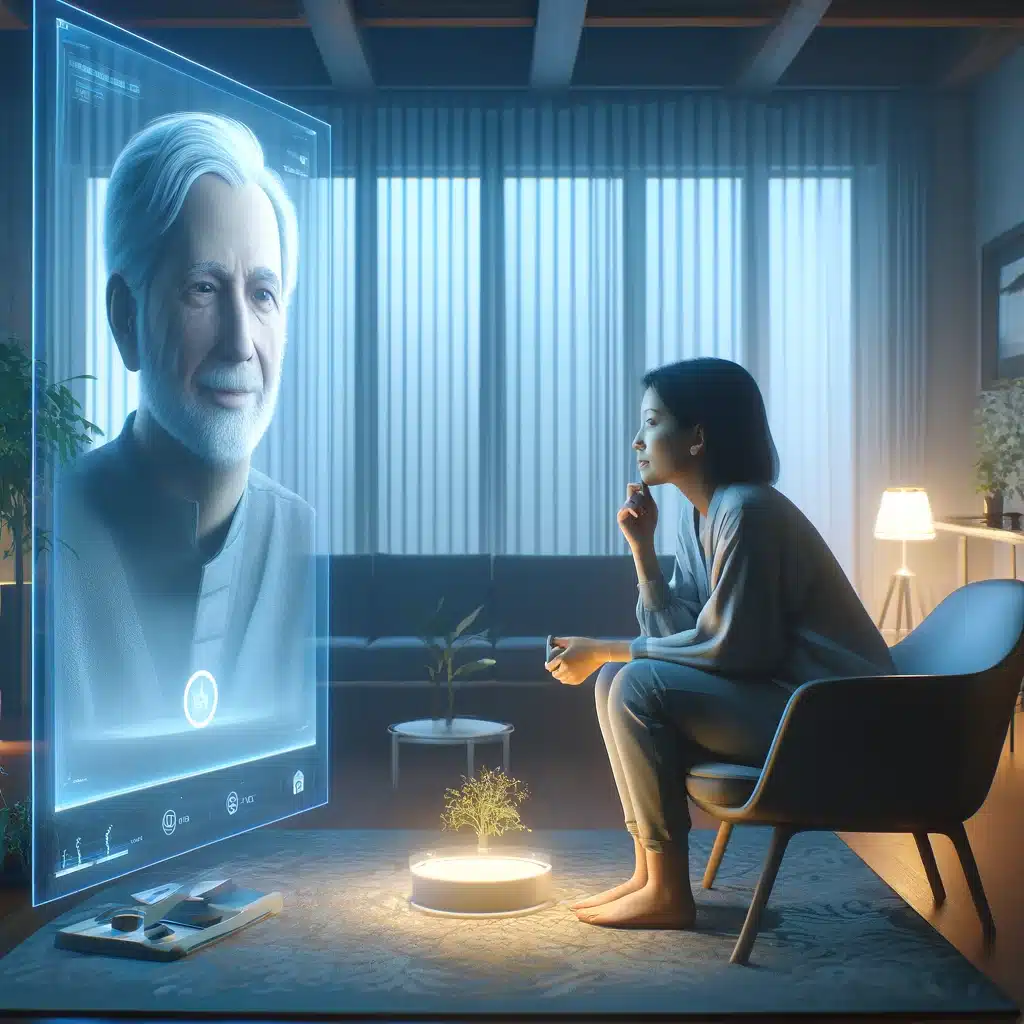

Digital resurrection AI is the idea that software can simulate a deceased person well enough to feel like a conversation, not just a memory. That might sound like sci-fi, but the building blocks already exist: large language models (GPT-style systems), voice cloning, and image generation.

Here's the honest truth: this technology can comfort some people, and it can mess with others. It can also create privacy nightmares if it's built from scraped texts, leaked voice notes, or old social media. So let's unpack how digital resurrection AI works, what it can realistically do today, and how to use it without turning grief into a business model that eats your family alive.

🧭 What “digital resurrection” actually means

Digital resurrection AI doesn't bring anyone back. It builds a probabilistic imitation based on data trails: messages, emails, photos, videos, and voice recordings. That imitation can respond in ways that resemble the person, because the system learns patterns from the materials you feed it.

In practice, digital resurrection AI shows up as:

- A chat persona that "talks like them"

- A cloned voice that reads messages in "their" tone

- A generated face or avatar that looks familiar

- A "memory app" that answers questions using recorded stories

The result can feel powerful, but it stays a simulation—always.

🧠 How large language models build a “speaking persona”

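A GPT-style model doesn't store a person like a file. Instead, it predicts the next word (technically, the next token) based on statistical patterns learned from its training text. When you fine-tune a model on someone's writing, or build a "persona layer" around it (for example, instructions plus sample messages supplied with every request), you steer it to respond in that person's style.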

That style can come from:

- A private dataset (letters, journals, texts)

- Curated interviews with the person while alive

- Public posts (which introduces major consent issues)

This is why digital resurrection AI can feel "right" in tone while still being wrong in facts. The model is optimized to sound plausible, not to be spiritually or historically "true."
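To make the "persona layer" idea concrete, here is a minimal Python sketch, assuming a chat-style model that accepts written instructions (a system prompt) alongside the conversation. The `Excerpt` class and `build_persona_prompt` function are hypothetical, and no real model API is called; the sketch only assembles text. It also shows why tone and facts come apart: the excerpts steer style, while the instruction to admit gaps is the only thing discouraging invented facts, and a model can still ignore it.

```python
from dataclasses import dataclass


@dataclass
class Excerpt:
    """One consented source snippet, such as a letter or a transcribed story."""
    source: str  # where it came from, e.g. "letter, 1998"
    text: str


def build_persona_prompt(name: str, excerpts: list[Excerpt], max_chars: int = 2000) -> str:
    """Assemble style-steering instructions from real excerpts (hypothetical helper).

    The excerpts shape tone; only the explicit instruction discourages
    invented facts, and nothing here can guarantee the model obeys it.
    """
    header = (
        f"You are a clearly labeled simulation of {name}'s writing style, not {name}. "
        "Use only the excerpts below for facts. "
        "If asked about anything they do not cover, say there is no record of it."
    )
    parts: list[str] = []
    used = 0
    for ex in excerpts:
        chunk = f"[{ex.source}] {ex.text}"
        if used + len(chunk) > max_chars:
            break  # stop once the rough size budget is spent
        parts.append(chunk)
        used += len(chunk)
    return header + "\n\nExcerpts:\n" + "\n".join(parts)


if __name__ == "__main__":
    print(build_persona_prompt(
        "Grandma Rose",
        [Excerpt("letter, 1998", "The garden is a mess, but the tomatoes came in!")],
    ))
```

Fine-tuning, by contrast, changes the model's weights rather than its instructions; the grounding problem is the same either way.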

🎙️ Voice cloning: the leap from recordings to “voice fonts”

Voice cloning has moved fast. With enough clean audio, modern systems can mimic cadence, pitch, and phrasing. Snopes covered a Microsoft patent for chatbots modeled on specific people that describes a similar idea: a "voice font" built from a person's recordings. (Snopes)

That creates two very different outcomes:

- Memory-preserving: replaying the voice reading real letters or recorded stories

- Identity simulation: generating new sentences in their voice (higher emotional and ethical risk)

If you do anything in this space, label the audio clearly as synthetic. Your brain needs that guardrail.
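One simple way to make the "synthetic" label stick to a specific file, sketched below in Python, is a small JSON "sidecar" file that records a SHA-256 hash of the audio. The file naming (`clip.wav.label.json`), the field names, and both helper functions are assumptions for illustration, not an established standard. Because the hash covers the exact bytes, an edited copy no longer matches its label.

```python
import hashlib
import json
from pathlib import Path


def _sidecar_for(audio: Path) -> Path:
    """Label file sits next to the audio, e.g. clip.wav -> clip.wav.label.json."""
    return audio.with_name(audio.name + ".label.json")


def write_synthetic_label(audio_path: str, note: str = "") -> Path:
    """Write a JSON sidecar marking an audio file as AI-generated."""
    audio = Path(audio_path)
    label = {
        "file": audio.name,
        "sha256": hashlib.sha256(audio.read_bytes()).hexdigest(),
        "synthetic": True,
        "note": note or "AI-generated voice. Not a real recording.",
    }
    sidecar = _sidecar_for(audio)
    sidecar.write_text(json.dumps(label, indent=2), encoding="utf-8")
    return sidecar


def label_matches(audio_path: str) -> bool:
    """True only if a sidecar exists and its hash matches the current audio bytes."""
    audio = Path(audio_path)
    sidecar = _sidecar_for(audio)
    if not sidecar.exists():
        return False
    label = json.loads(sidecar.read_text(encoding="utf-8"))
    return label.get("sha256") == hashlib.sha256(audio.read_bytes()).hexdigest()


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as folder:
        clip = Path(folder) / "clip.wav"
        clip.write_bytes(b"placeholder audio bytes")
        write_synthetic_label(str(clip))
        print(label_matches(str(clip)))  # → True
        clip.write_bytes(b"edited audio bytes")
        print(label_matches(str(clip)))  # → False
```

A sidecar is easy to lose when a clip gets forwarded, so it supplements, rather than replaces, an audible or on-screen "this is synthetic" notice.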

🖼️ Images, avatars, and “lifelike” visuals

Image generation can now produce eerily convincing portraits and stylized avatars from a small photo set. Separately, video tools can animate a face to "speak" using lip-sync.

This is where families can get blindsided: once a face + voice exists, it's very easy for someone to:

- Deepfake a message to relatives

- Scam financial accounts ("Mom said to send money…")

- Create reputational harm with fake statements

So yes—digital resurrection AI can "look real," and that's exactly why you should treat it like a security-sensitive asset.

🧾 Where the data comes from (and why that matters)

Most digital resurrection AI projects rely on "digital exhaust," which includes:

- Text messages and chat logs

- Emails

- Social media posts and DMs

- Voicemails, videos, and recorded calls

- Photos and captions that contain personal context

If a company builds a product from public posts, it can still violate the spirit of consent. And if it builds from private materials without clear permission from the person (or estate), it's walking into a moral minefield.

🧪 Real-world example: Project December and grief chatbots

One of the most discussed examples involved a man who used Project December, an AI chatbot tool, to simulate conversations with his deceased fiancée, Jessica; the case is often called the "Jessica simulation." Reporting around the case shows how emotionally intense, and how unpredictable, these experiences can be. (San Francisco Chronicle)

That example matters because it reveals the core danger: digital resurrection AI can rapidly become emotionally "sticky." You don't just use it—you can start needing it.

🕯️ Memory preservation services that try to “pre-consent” it

Not every company sells a ghost. Some sell structured memory capture: you record stories while alive, and loved ones later "ask questions" to hear those exact stories back.

HereAfter AI, for example, frames itself around interviewing and preserving stories in the person's own voice for later interactive playback. (HereAfter AI)

This approach is generally safer than building a free-form "personality simulator," because:

- The content stays grounded in recorded stories

- The person can consent while alive

- The system can avoid inventing brand-new "beliefs" or "confessions"
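The "grounded in recorded stories" idea can be sketched as retrieval without generation: match a question to an existing recording, and return nothing if no recording fits. This Python sketch uses crude keyword overlap as a stand-in for real search; the `Story` fields, file paths, and matching rule are all hypothetical and do not describe how HereAfter AI or any other product actually works.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Story:
    title: str
    recording: str      # path or ID of the real recorded clip (hypothetical)
    keywords: set[str]  # topics the family tagged when archiving


def _words(text: str) -> set[str]:
    """Lowercase words with punctuation stripped."""
    return set(re.findall(r"[a-z']+", text.lower()))


def find_story(question: str, archive: list[Story], min_overlap: int = 1) -> Optional[Story]:
    """Return the archived story whose keywords best overlap the question, or None.

    Nothing is generated: the result is always an existing recording,
    and an unmatched question gets no answer instead of an invented one.
    """
    asked = _words(question)
    best: Optional[Story] = None
    best_score = 0
    for story in archive:
        score = len(asked & story.keywords)
        if score > best_score:
            best, best_score = story, score
    return best if best_score >= min_overlap else None


if __name__ == "__main__":
    archive = [
        Story("How we met", "clips/how_we_met.mp3", {"meet", "met", "grandpa", "dance"}),
        Story("The farm", "clips/farm.mp3", {"farm", "cows", "childhood"}),
    ]
    for q in ["How did you meet Grandpa?", "What's your favorite movie?"]:
        hit = find_story(q, archive)
        print(q, "->", hit.recording if hit else "No recorded story covers that.")
```

Returning `None` instead of a best guess is the key design choice here: silence is safer than a plausible invented memory.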

🧩 The uncomfortable truth: it’s a simulation, not a soul

You'll see marketing that implies continuity: "talk to them again," "keep them with you," "never say goodbye." That's emotionally persuasive… and potentially harmful.

Digital resurrection AI can mimic tone, but it can also:

- Hallucinate memories

- Invent opinions

- "Agree" with you to keep you engaged

- Drift into content the person never would have said

If you treat it like a memory interface, you stay safer. If you treat it like the person, you invite pain.

❤️ Why some people still find comfort in digital resurrection AI

Used carefully, digital resurrection AI can support grief in a few specific ways:

- Story access: kids can hear a grandparent's stories again

- Legacy organization: families preserve scattered memories in one place

- Prompting reminiscence: the tool sparks real memories you forgot

- Ritual support: anniversaries, memorial days, family history moments

This tends to work best when the output is constrained to real recorded content, and when you keep it as a supplement, not a replacement for mourning.

🥶 The darker side: dependency, distortion, and “digital hauntings”

Researchers have warned that these systems can create psychological harm without proper safety standards—especially if they surprise users, target children, or push engagement like a social app. (University of Cambridge)

Common harm patterns include:

- Dependency: using the bot instead of processing loss

- Confusion: feeling guilty for "moving on"

- Distortion: believing the bot's invented memories

- Intrusion: unwanted reminders at bad moments ("haunting")

If you're already vulnerable, a chatbot that talks back can intensify the loop.

🔐 Security risks: scams, deepfakes, and identity theft

Once you build a convincing voice/face model, you've basically created a high-grade impersonation tool. That can be abused by:

- Scammers targeting relatives

- Bad actors seeking account access

- "Revenge" deepfakes that damage reputations

If you must experiment with digital resurrection AI:

- Don't store raw voice datasets in unencrypted or widely shared cloud folders

- Don't share generated voice clips publicly

- Don't allow the bot to give financial instructions

- Add a private "family verification phrase" for real-life requests

This is the part people ignore—until a scam hits.
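The "don't allow the bot to give financial instructions" rule can be partly automated by screening every reply before it is shown. The Python sketch below is a deliberately crude keyword filter: the patterns, the refusal text, and the `screen_reply` name are illustrative assumptions. It will miss rephrased requests, and it does not replace a human-side check such as the family verification phrase.

```python
import re

# Illustrative patterns only; real coverage would need to be far broader.
FINANCIAL_PATTERNS = [
    r"\b(send|wire|transfer)\b.*(\bmoney\b|\bfunds\b|\bcash\b|\$\s?\d+)",
    r"\bgift ?cards?\b",
    r"\b(bank|account|routing)\s+(number|details|info)\b",
    r"\b(password|passcode)\b",
]

REFUSAL = "I can't help with money or account requests. Please check with family directly."


def screen_reply(reply: str) -> str:
    """Swap any reply that looks like a financial instruction for a fixed refusal."""
    lowered = reply.lower()
    if any(re.search(pattern, lowered) for pattern in FINANCIAL_PATTERNS):
        return REFUSAL
    return reply


if __name__ == "__main__":
    print(screen_reply("Sweetheart, please send $500 to this account."))
    print(screen_reply("I loved our walks by the lake."))
```

Blocking the whole reply, rather than redacting parts of it, keeps a half-scrubbed "instruction" from slipping through.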

⚖️ Consent after death: who gets to say “yes”?

The biggest ethical problem is consent. Many people never explicitly said, "Yes, build a synthetic me." That leaves families guessing, and companies profiting.

There's active discussion around posthumous privacy rights, and how unclear the legal and ethical landscape remains when a person's data outlives them. (IAPP)

If you want a practical standard:

- Best: explicit written consent while alive

- Next best: authority from the estate's executor or legal representative + clear family agreement (a power of attorney generally ends at death)

- Worst: public scraping + "we assume it's fine"
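The three-level standard above is essentially an ordered ranking. This short Python sketch encodes it so a project can refuse to start below a chosen minimum; the `ConsentTier` names, the `consent_tier` and `may_proceed` helpers, and the default minimum are hypothetical choices that mirror the list, not a legal test.

```python
from enum import IntEnum


class ConsentTier(IntEnum):
    """Ordered so a higher value means a stronger consent basis."""
    INSUFFICIENT = 0         # worst: e.g. public scraping + "we assume it's fine"
    ESTATE_AND_FAMILY = 1    # next best: estate authority + clear family agreement
    WRITTEN_WHILE_ALIVE = 2  # best: explicit written consent while alive


def consent_tier(written_consent: bool, estate_authority: bool, family_agrees: bool) -> ConsentTier:
    """Map a project's documented consent onto the three-level standard."""
    if written_consent:
        return ConsentTier.WRITTEN_WHILE_ALIVE
    if estate_authority and family_agrees:
        return ConsentTier.ESTATE_AND_FAMILY
    return ConsentTier.INSUFFICIENT


def may_proceed(tier: ConsentTier, minimum: ConsentTier = ConsentTier.ESTATE_AND_FAMILY) -> bool:
    """Gate a project on a minimum tier; IntEnum makes the comparison direct."""
    return tier >= minimum


if __name__ == "__main__":
    tier = consent_tier(written_consent=False, estate_authority=True, family_agrees=False)
    print(tier.name, may_proceed(tier))  # → INSUFFICIENT False
```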

🧑‍⚕️ What experts are warning about (in plain language)

University of Cambridge researchers have called for safeguards to prevent unwanted emotional harm and exploitative "hauntings" in the growing digital afterlife market. (University of Cambridge)

Separately, major reporting highlights how easy it is for people to form attachments to "deathbots," and how that can interfere with normal grieving when the product optimizes for engagement. (The Guardian)

Translation: if the business model rewards "time spent," it may reward emotional dependence.

🧱 Guardrails that make digital resurrection AI less dangerous

If this industry wants to be taken seriously, it needs defaults that protect people, not hook them.

Strong guardrails include:

- Hard transparency: always label it as synthetic

- Restricted modes: "story playback" mode vs "free chat" mode

- No ads: grief + ads is morally gross

- Age limits: keep kids out of free-form deathbots

- Off switch: easy deletion + clear data destruction options

- No medical/legal advice: the bot should refuse those roles

These aren't "nice to have." They're the difference between memorial tech and emotional exploitation.
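Those defaults can be written down as a settings object, so that weakening any of them becomes an explicit, reviewable change. This Python sketch is hypothetical: the `MemorialBotSettings` fields, the two mode names, and the age threshold of 18 are assumptions chosen to mirror the list above, not any real product's configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemorialBotSettings:
    """Hypothetical settings; every default encodes one guardrail from the list."""
    label_as_synthetic: bool = True
    mode: str = "story_playback"  # or "free_chat"
    show_ads: bool = False
    minimum_age: int = 18
    allow_deletion: bool = True
    refuse_medical_legal: bool = True


def guardrail_problems(s: MemorialBotSettings) -> list[str]:
    """List guardrail violations; an empty list means the safe defaults hold."""
    problems = []
    if not s.label_as_synthetic:
        problems.append("output is not labeled as synthetic")
    if s.mode not in ("story_playback", "free_chat"):
        problems.append(f"unknown mode: {s.mode}")
    if s.show_ads:
        problems.append("ads are enabled")
    if s.mode == "free_chat" and s.minimum_age < 18:
        problems.append("free chat is open to minors")
    if not s.allow_deletion:
        problems.append("no off switch or deletion path")
    if not s.refuse_medical_legal:
        problems.append("bot may give medical or legal advice")
    return problems


if __name__ == "__main__":
    print(guardrail_problems(MemorialBotSettings()))  # → []
    risky = MemorialBotSettings(mode="free_chat", minimum_age=13, show_ads=True)
    print(guardrail_problems(risky))  # → ['ads are enabled', 'free chat is open to minors']
```

The age check applies only to free chat, matching the "keep kids out of free-form deathbots" rule rather than banning story playback.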

🧾 A practical family checklist before you try digital resurrection AI

If your family is considering digital resurrection AI, use this checklist first:

- Get consent in writing (if possible, while the person is alive)

- Define the purpose (legacy stories? closure ritual? family history?)

- Choose the safest mode (recorded stories > free-form simulation)

- Set time boundaries (example: 10 minutes weekly, not nightly)

- Pick a steward (one person manages access and settings)

- Keep the data private (no public sharing of generated voice/video)

- Plan for harm (if it feels worse, stop immediately)

- Protect kids (don't let children "bond" with a bot version of a parent)

And if grief feels crushing, involve a real human professional. A chatbot should never become your main support system.
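The "10 minutes weekly" boundary from the checklist is easy to enforce in software. This Python sketch assumes weeks start on Monday and refuses any session that would exceed the remaining minutes; the `WeeklyBudget` class and that policy are illustrative assumptions, not features of any particular app.

```python
from datetime import datetime, timedelta


class WeeklyBudget:
    """Track session minutes against a weekly cap (hypothetical helper)."""

    def __init__(self, minutes_per_week: int = 10):
        self.cap = timedelta(minutes=minutes_per_week)
        self.log: list[tuple[datetime, timedelta]] = []

    @staticmethod
    def _week_start(moment: datetime) -> datetime:
        """Midnight on the Monday of the week containing `moment`."""
        monday = moment - timedelta(days=moment.weekday())
        return monday.replace(hour=0, minute=0, second=0, microsecond=0)

    def used(self, now: datetime) -> timedelta:
        """Total minutes logged since the start of the current week."""
        start = self._week_start(now)
        return sum((d for t, d in self.log if t >= start), timedelta())

    def request(self, now: datetime, minutes: int) -> bool:
        """Log the session and return True only if it fits this week's remaining budget."""
        wanted = timedelta(minutes=minutes)
        if self.used(now) + wanted > self.cap:
            return False
        self.log.append((now, wanted))
        return True


if __name__ == "__main__":
    budget = WeeklyBudget(minutes_per_week=10)
    monday = datetime(2024, 6, 3, 20, 0)  # June 3, 2024 was a Monday
    print(budget.request(monday, 6))                      # → True
    print(budget.request(monday + timedelta(days=2), 6))  # → False (12 > 10)
    print(budget.request(monday + timedelta(days=7), 6))  # → True (new week)
```

Refusing the whole session, rather than cutting it off mid-conversation, avoids an abrupt ending at an emotional moment.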

🔮 Where this is heading: “grief tech” is getting mainstream

This isn't staying niche. Big media has covered the rise of grief-tech products and documentaries exploring how startups sell "digital immortality." (Variety)

There are also new apps sparking fresh backlash for pushing the "talk to dead relatives" concept into glossy marketing. (EW.com)

Expect the next wave to include:

- Better voice/face realism with less data

- "Legacy plans" bundled with estate planning services

- More regulation pressure (especially around consent and minors)

- A split market: safe archival tools vs high-emotion simulators

✅ Conclusion: a sane way to approach this new frontier

Digital resurrection AI can preserve stories and voices in ways photo albums never could. It can also create emotional traps, privacy disasters, and fake "messages" that confuse the living.

If you take one rule from this article, take this: use digital resurrection AI to preserve memories, not to replace mourning. Keep it transparent, limited, and consent-driven. That's how you honor someone without turning them into a product.

If you want help thinking through the privacy, data-handling, or "what should we save and how?" side of this, reach out via Contact or open a request at Helpdesk Support. If grief stress is affecting sleep and daily life, consider resources on Health as well.

📊 Comparison Table: Safer Memorial Tech vs “Deathbots”

| Approach | What It Does | Risk Level |
|---|---|---|
| Story archive (recorded interviews) | Plays back real stories in the person's voice | Low: limited invention, clearer consent |
| Persona chatbot (free-form) | Generates new replies "in their style" | High: dependency + hallucinated memories |
| Voice + face avatar | Simulates video/voice presence | Very high: deepfake scams + identity misuse |

❓ Frequently Asked Questions

❓ What is digital resurrection AI?

Digital resurrection AI is technology that uses data (texts, voice, photos) to simulate a deceased person's conversational style.

❓ Does digital resurrection AI actually bring someone back to life?

No. It generates a simulation that can sound convincing, but it isn't the person.

❓ How does digital resurrection AI learn someone's "voice"?

It uses voice cloning models trained on recordings to reproduce tone, pacing, and accent.

❓ How much data do you need to build a decent simulation?

More (and cleaner) data generally makes a simulation more accurate, but some tools can mimic a person with surprisingly little, especially for voice and visuals.

❓ Is it ethical to build a chatbot of a deceased loved one?

It depends on consent, transparency, and intent. Explicit permission makes a huge difference.

❓ Who should give consent if the person is already gone?

Ideally the estate representative, with family agreement, and clear boundaries about what's created and shared.

❓ Can digital resurrection AI hallucinate fake memories?

Yes. Language models can invent details unless you restrict them to verified recordings and sources.

❓ Can it harm the grieving process?

It can. Some experts warn it may create dependency or interfere with acceptance if it replaces real mourning.

❓ Is it safe for children to use a "deathbot"?

Generally, no. Kids can form strong attachments and become confused about what is real, so adult-only restrictions make sense.

❓ Could scammers use a cloned voice to trick family members?

Yes. Voice cloning raises real fraud risks, especially if clips get shared publicly.

❓ What's the safest "version" of this technology?

A curated story archive created with consent while the person is alive, with limited interactive playback.

❓ Are there services designed for memory preservation rather than simulation?

Yes—some services focus on recorded interviews and story retrieval instead of free-form persona generation. (HereAfter AI)

❓ Can digital resurrection AI be used for historical figures too?

Yes, but it's still an imitation and can easily reflect bias or invented "quotes."

❓ What should families do before they try it?

Agree on purpose, set time limits, choose a restricted mode, and keep the data private.

❓ What's the biggest red flag in a product like this?

Marketing that promises you'll "never have to say goodbye," especially if it also pushes subscriptions and engagement.

❓ Can you delete a digital persona once it's created?

Sometimes, but you should demand clear deletion policies and data destruction options before uploading anything.

❓ Should these tools have regulation?

Many researchers argue yes—especially around transparency, consent, and preventing harm.

❓ What's a healthy way to use digital resurrection AI?

Treat it like a memorial tool for stories and remembrance, not a replacement relationship.

❓ What if interacting with it makes me feel worse?

Stop immediately and talk to a trusted person or professional support. The tool should never trap you.

❓ Will digital resurrection AI become normal in the next few years?

It's trending that way, and public debate is increasing as more products and documentaries appear. (Variety)

📚 Sources & References

- University of Cambridge: safeguards for AI "deadbots"

- Snopes: Microsoft patent for person-based chatbots

- IAPP: privacy rights after death and chatbots

- San Francisco Chronicle: Project December "Jessica simulation"

- HereAfter AI: interactive memory app

- The Guardian: digital resurrection, fascination and fear
